
In a recent article, Ars Technica's Benj Edwards explored some of the limitations of reasoning models trained with reinforcement learning.

For example, one study "revealed puzzling inconsistencies in how models fail."

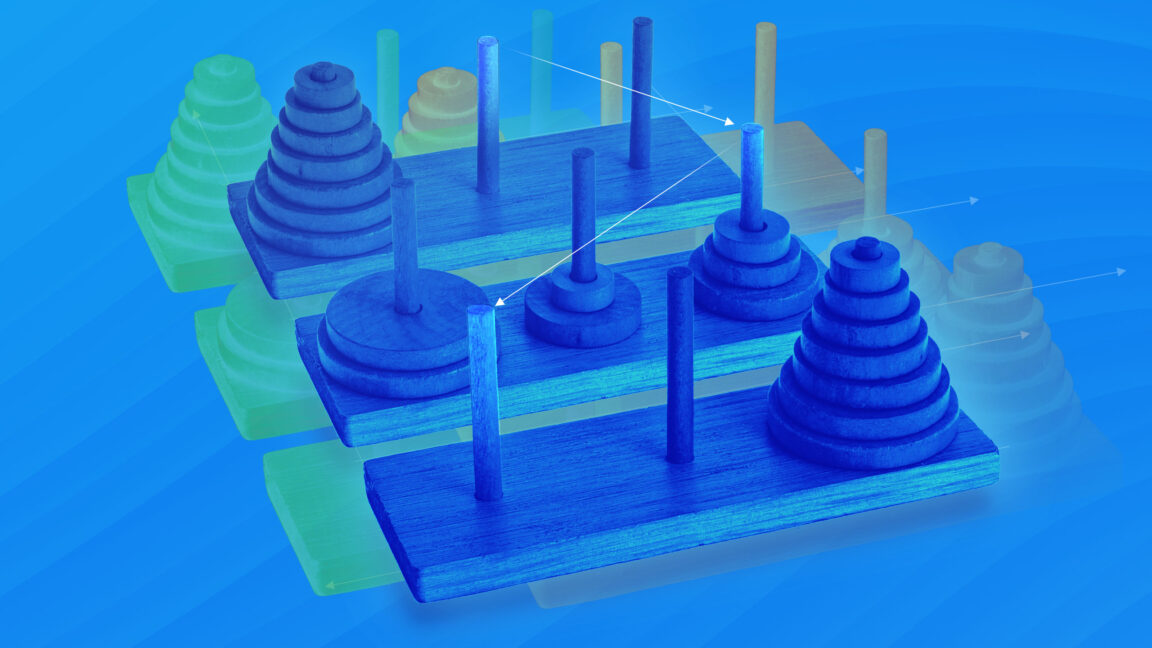

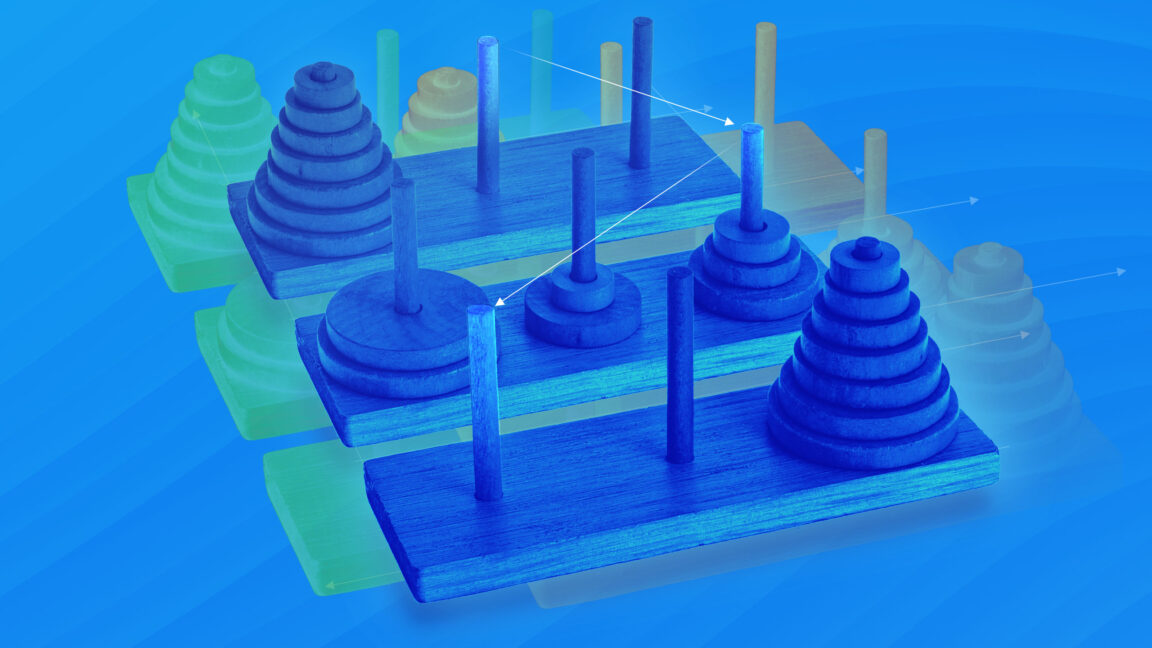

Claude 3.7 Sonnet could perform up to 100 correct moves in the Tower of Hanoi but failed after just five moves in a river crossing puzzle.

A retrieval-augmented generation (RAG) system performs a keyword- or vector-based search to retrieve the most relevant documents.
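To make the vector-based variant concrete, here is a minimal sketch of the retrieval step, assuming a toy bag-of-words "embedding" in place of the learned embedding model and search index a production RAG system would use. The function names (`embed`, `cosine`, `retrieve`) are hypothetical and for illustration only: each document is scored by cosine similarity to the query, and the top `k` are returned for inclusion in the model's prompt.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector.

    Assumption: stands in for a learned embedding model.
    """
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (a single, fixed search)."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


docs = [
    "the tower of hanoi is a puzzle",
    "river crossing puzzles involve boats",
    "vector search ranks documents by similarity",
]
top = retrieve("how does vector search rank documents", docs, k=1)
```

Here `top` is `["vector search ranks documents by similarity"]`, the only document sharing words with the query. Note that the query is fixed up front: the model never gets to decide what to search for, which is the limitation the next paragraphs address.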

RAG systems can make for compelling demos.

It is possible to develop much better information retrieval systems by allowing the model itself to choose search queries.

This approach requires a model that can stay on task across multiple rounds of searching and analysis.

LLMs were terrible at this prior to 2024, as the examples of AutoGPT and BabyAGI demonstrated.
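The loop described above, in which the model picks each query, reads the results, and decides whether to keep searching, can be sketched as follows. This is a minimal illustration under stated assumptions, not how any particular product works: `agentic_search`, `toy_policy`, and `toy_search` are hypothetical names, and `toy_policy` is a hard-coded stand-in for the LLM call a real agent would make to choose its next query. The `max_steps` cap is a design choice that keeps the loop bounded even if the policy never decides to stop.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class AgentState:
    question: str
    queries: list[str] = field(default_factory=list)  # queries issued so far
    notes: list[str] = field(default_factory=list)    # results gathered so far


# Hypothetical interfaces: a policy (stand-in for the LLM) returns the next
# query, or None when it judges it has enough information to answer.
Policy = Callable[[AgentState], Optional[str]]
SearchTool = Callable[[str], list[str]]


def agentic_search(question: str, policy: Policy, search: SearchTool,
                   max_steps: int = 5) -> AgentState:
    """Iterate: decide on a query, run the search, record the results."""
    state = AgentState(question)
    for _ in range(max_steps):
        query = policy(state)          # "think": choose what to look up next
        if query is None:              # policy says it is done searching
            break
        state.queries.append(query)
        state.notes.extend(search(query))  # "act", then "observe" the results
    return state


# Toy usage: a two-entry corpus and a hard-coded policy (both hypothetical).
corpus = {
    "tower of hanoi": ["Tower of Hanoi: move disks between pegs"],
    "river crossing": ["River crossing: ferry items across a river"],
}


def toy_search(query: str) -> list[str]:
    return corpus.get(query, [])


def toy_policy(state: AgentState) -> Optional[str]:
    # Look up each puzzle once, then stop.
    for topic in ("tower of hanoi", "river crossing"):
        if topic not in state.queries:
            return topic
    return None


result = agentic_search("Compare the two puzzles", toy_policy, toy_search)
```

After the run, `result.queries` is `["tower of hanoi", "river crossing"]`. The hard part in practice is the policy itself: a model that loses track of the question after a few rounds will keep issuing unhelpful queries until it hits the step cap.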

The same point applies to the other agentic applications I mentioned at the start of the article, such as coding and computer-use agents.

What these systems have in common is a capacity for iterated reasoning.

They think, take an action, think about the result, take another action, and so forth.

Timothy B. Lee was on staff at Ars Technica from 2017 to 2021.