
Nvidia today announced its new GPU for machine learning and inferencing in the data center.

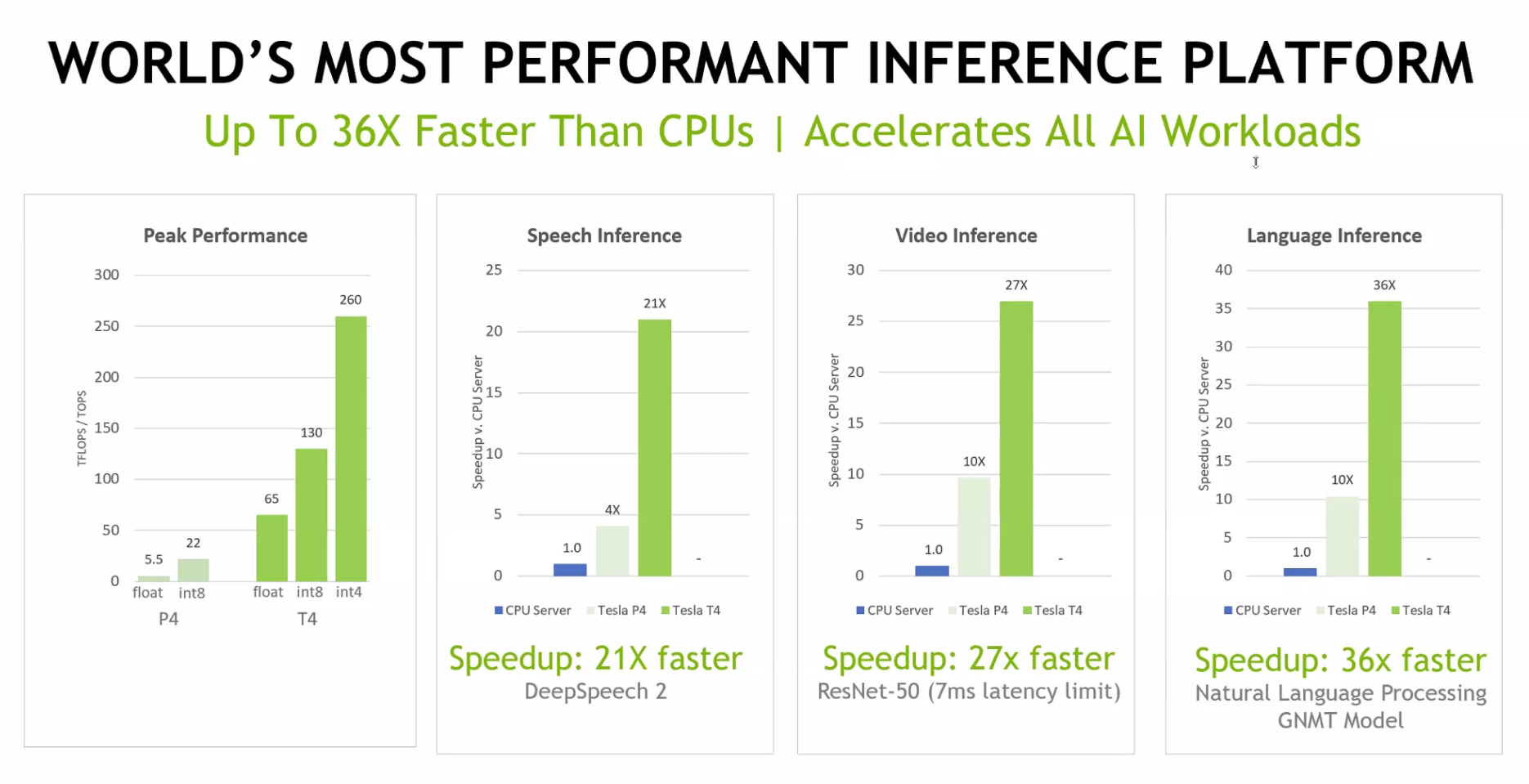

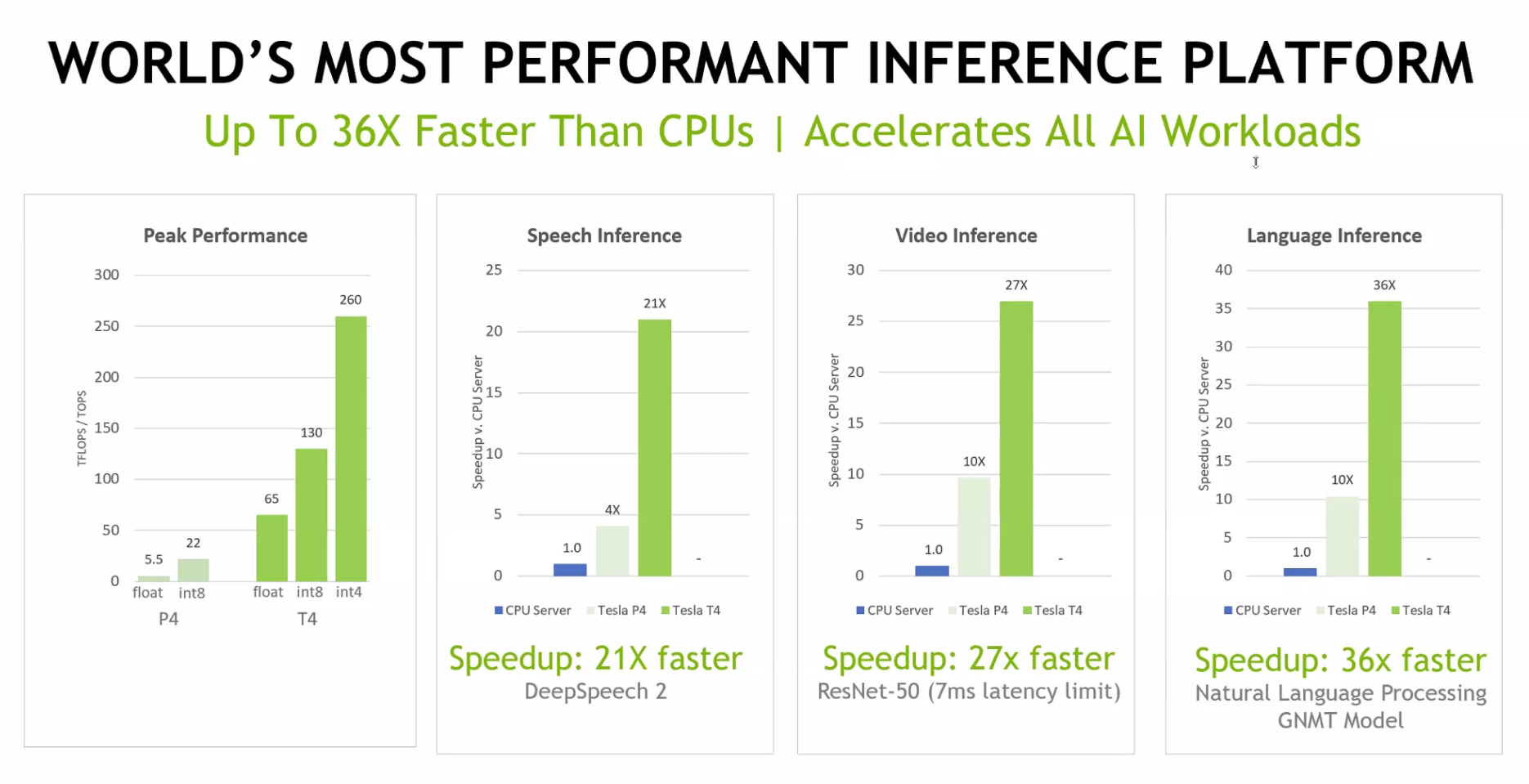

The new Tesla T4 GPUs (where the 'T' stands for Nvidia's new Turing architecture) are the successors to the current batch of P4 GPUs that virtually every major cloud computing provider now offers.

Google, Nvidia said, will be among the first to bring the new T4 GPUs to its Cloud Platform.

Nvidia argues that the T4s are significantly faster than the P4s. For language inferencing, for example, the T4 is 34 times faster than using a CPU and more than 3.5 times faster than the P4.
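The two speedup figures can be chained to see what they imply about the P4 itself. This is a back-of-the-envelope sketch that assumes both ratios come from the same language-inferencing benchmark and the same CPU baseline, which the announcement does not spell out. The constant and function names are illustrative, not from Nvidia.

```python
# Relative speedups Nvidia quotes for language inferencing (vendor claims, not measurements).
T4_VS_CPU = 34.0  # T4 is 34x faster than a CPU
T4_VS_P4 = 3.5    # T4 is "more than" 3.5x faster than the P4


def implied_p4_vs_cpu(t4_vs_cpu: float, t4_vs_p4: float) -> float:
    """Chain two speedup ratios measured on the same workload.

    If T4 = a * CPU and T4 = b * P4, then P4 = (a / b) * CPU.
    """
    if t4_vs_p4 <= 0:
        raise ValueError("speedup must be positive")
    return t4_vs_cpu / t4_vs_p4


if __name__ == "__main__":
    ratio = implied_p4_vs_cpu(T4_VS_CPU, T4_VS_P4)
    # Because 3.5x is a lower bound, the result is an upper bound on the P4's lead over the CPU.
    print(f"Implied P4 speedup over CPU: at most ~{ratio:.1f}x")
```

Under those assumptions, the P4 would be at most roughly 9.7 times faster than the CPU on this workload, since a larger T4-over-P4 ratio would shrink that figure further.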

Peak performance for the T4 is 260 TOPS for 4-bit integer (INT4) operations and 65 teraflops for half-precision (FP16) floating-point operations. The T4 sits on a standard low-profile 75-watt PCIe card.
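To put the peak figures quoted above next to the 75-watt card rating, here is a rough per-watt calculation. It assumes the card draws its full rated power while hitting peak throughput, which the announcement does not claim; sustained throughput on real models will be lower. The names are illustrative only.

```python
PEAK_INT4_TOPS = 260.0  # peak 4-bit integer throughput, tera-operations per second
PEAK_FLOAT_TOPS = 65.0  # peak floating-point throughput (half precision on the T4)
CARD_POWER_W = 75.0     # low-profile PCIe card power rating, watts


def throughput_per_watt(peak_tops: float, watts: float) -> float:
    """Peak throughput divided by rated power (assumes the card runs at its full rating)."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return peak_tops / watts


if __name__ == "__main__":
    int4 = throughput_per_watt(PEAK_INT4_TOPS, CARD_POWER_W)
    fp = throughput_per_watt(PEAK_FLOAT_TOPS, CARD_POWER_W)
    print(f"INT4: {int4:.2f} TOPS/W, floating point: {fp:.2f} TOPS/W")
    print(f"INT4 vs floating-point peak: {PEAK_INT4_TOPS / PEAK_FLOAT_TOPS:.0f}x")
```

The 4x gap between the integer and floating-point peaks is one reason low-precision integer formats are attractive for inferencing, provided a model tolerates the reduced precision.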

What's most important, though, is that Nvidia designed these chips specifically for AI inferencing.

"What makes Tesla T4 such an efficient GPU for inferencing is the new Turing Tensor Core," said Ian Buck, Nvidia VP and GM of its Tesla data center business. "[Nvidia CEO] Jensen [Huang] already talked about the Tensor Core and what it can do for gaming and rendering and for AI, but for inferencing, that's what it's designed for." In total, the chip features 320 Turing Tensor Cores and 2,560 CUDA cores.

In addition to the new chip, Nvidia is also launching a refresh of its TensorRT software for optimizing deep learning models.

This new version also includes the TensorRT inference server, a fully containerized microservice for data center inferencing that plugs seamlessly into an existing Kubernetes infrastructure.